[WEB AR] Chinatown History in LA

Please watch the video for the full on-site AR experience.

Position: Lead Developer (Web, AR)

- Project: Chinatown AR Experience

- Sponsor: NEH Foundation

- Location: Huntington, LA Metro

- Date: August 2023 - present

Team: USC Mobile & Environmental Media Lab (led by Professor Scott Fisher)

- Directed cross-functional teams consisting of 10+ developers, designers, and producers.

- Coordinated with the 8th Wall team on technical solutions and bug reports.

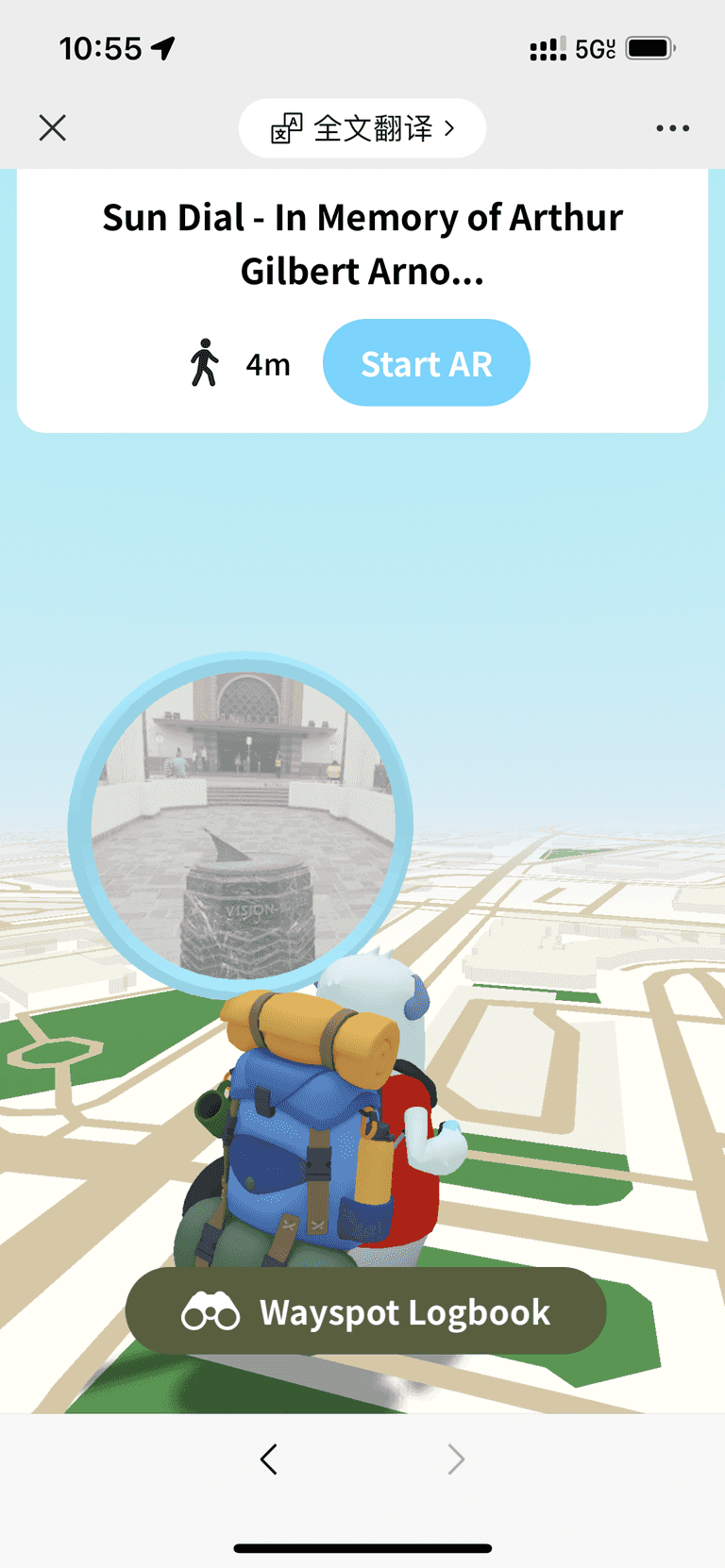

Venue: Union Station, Los Angeles Chinatown

Type: Web-based Augmented Reality Website

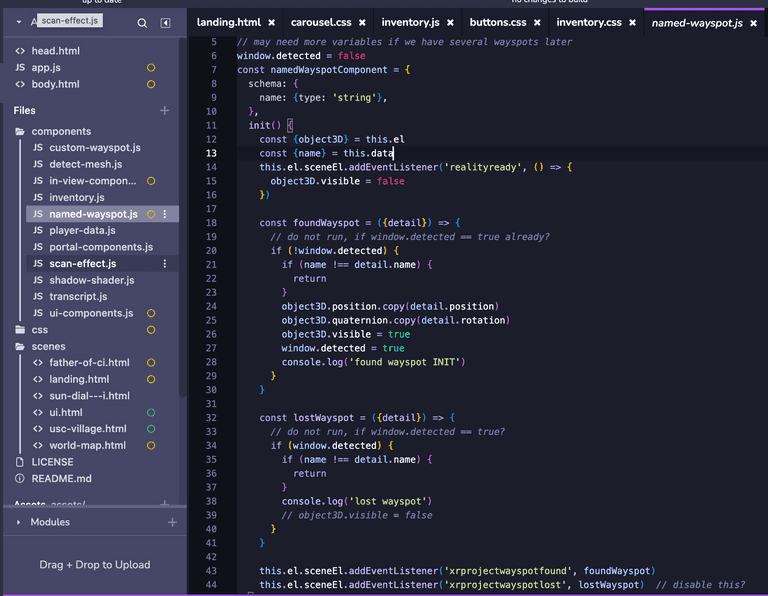

Tools:

- 8th Wall

- A-Frame API

- Vanilla JavaScript

- Blender

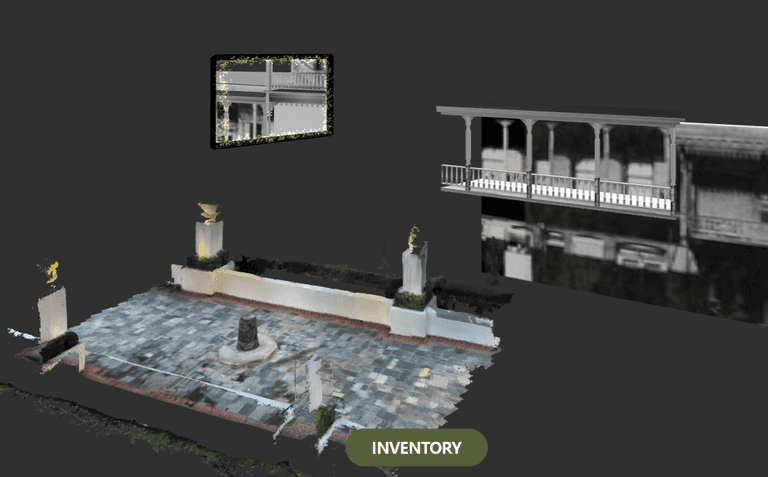

Environment Construction:

- Designed an 8th Wall Augmented Reality website spanning 5+ pages with scene transitions.

- Integrated the narrative of Chinatown history into the AR experience.

- Used 8th Wall's Visual Positioning System (VPS) through the A-Frame and 8th Wall APIs to anchor content to a real-world location.
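The site moves users through 5+ pages and scenes. One way to keep those transitions predictable is a small transition table. The sketch below is hypothetical. Only `mobileSundial` comes from the project (it is the initial `currentScene` of its `ui-component`). The other scene names and the `createSceneManager` helper are illustrative assumptions, not the project's code.

```javascript
// Hypothetical scene-flow table: every name except "mobileSundial" is a placeholder.
const TRANSITIONS = {
  landing: ["mobileSundial"],
  mobileSundial: ["portal", "landing"],
  portal: ["inventory", "mobileSundial"],
  inventory: ["portal"],
};

// Tracks the current scene and only allows moves listed in the transition table.
function createSceneManager(initialScene, transitions = TRANSITIONS) {
  if (!(initialScene in transitions)) {
    throw new Error(`Unknown scene: ${initialScene}`);
  }
  let current = initialScene;
  const listeners = [];

  return {
    get current() {
      return current;
    },
    // Register a callback that receives (from, to) after each successful transition.
    onChange(fn) {
      listeners.push(fn);
    },
    // Switch to `next` if the table allows it; returns false and stays put otherwise.
    goTo(next) {
      const allowed = transitions[current] || [];
      if (!allowed.includes(next)) return false;
      const from = current;
      current = next;
      listeners.forEach((fn) => fn(from, next));
      return true;
    },
  };
}
```

In the page, the `onChange` callback is where each scene's root entity would be shown or hidden, for example with `el.setAttribute("visible", false)`. Keeping the allowed moves in one table makes it harder to reach a scene out of order.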

Visual Effects:

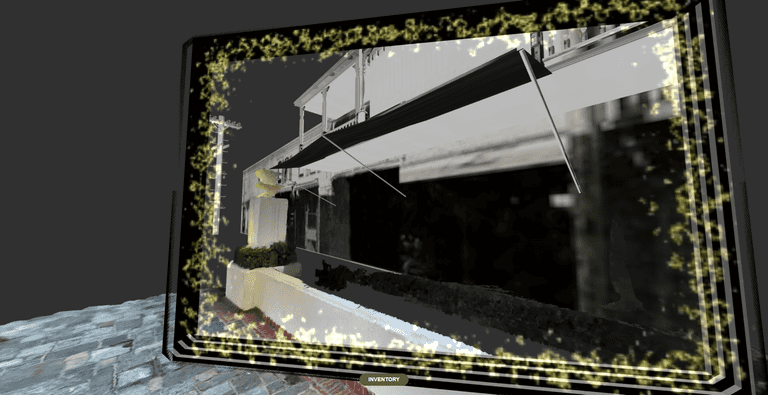

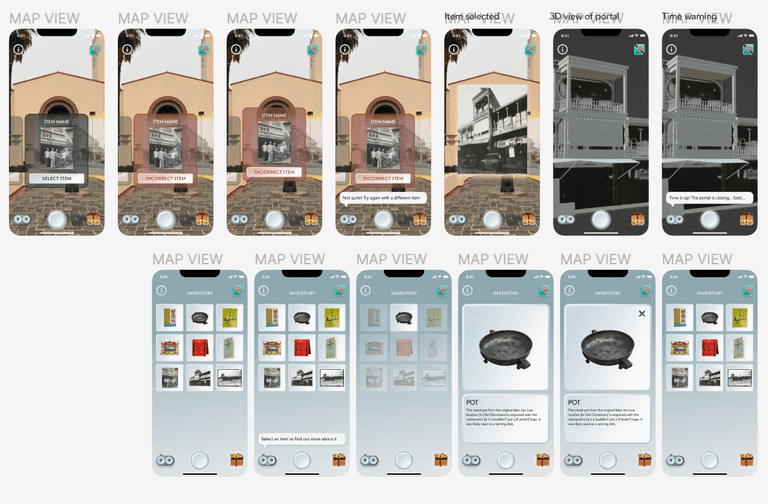

- Developed occlusion effects for the portal and embedded 3D models.

- Implemented the UI/UX design, including 5+ scene transitions between user menus and AR scenes.
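A common way to build portal occlusion in three.js-based stacks such as A-Frame is a depth-only "hider" material. The occluder mesh writes to the depth buffer but not the color buffer. Virtual content behind it is hidden, while the camera feed still shows through those pixels. The sketch below assumes that approach. The `occluder` component name and the `makeOccluder` helper are hypothetical, not necessarily how this project did it. `colorWrite`, `depthWrite`, `needsUpdate`, and `renderOrder` are standard three.js properties.

```javascript
// Hypothetical helper: turn every mesh under `root` (a three.js Object3D) into a depth-only occluder.
function makeOccluder(root, renderOrder = -1) {
  root.traverse((node) => {
    if (!node.isMesh) return;
    // A mesh may hold a single material or an array of materials.
    const materials = Array.isArray(node.material) ? node.material : [node.material];
    for (const material of materials) {
      material.colorWrite = false; // draw nothing visible...
      material.depthWrite = true; // ...but still fill the depth buffer
      material.needsUpdate = true;
    }
    // Lower renderOrder draws first, so the occluder's depth is in place before content behind it.
    node.renderOrder = renderOrder;
  });
  return root;
}

// Register as an A-Frame component only when running in the browser.
if (typeof AFRAME !== "undefined") {
  AFRAME.registerComponent("occluder", {
    init() {
      const apply = () => makeOccluder(this.el.object3D);
      // gltf-model emits "model-loaded" once the GLB meshes exist.
      this.el.addEventListener("model-loaded", apply);
      apply(); // also covers primitives whose mesh already exists
    },
  });
}
```

For a portal, the occluder is typically a shell around the doorway. The interior scene is then only visible through the opening.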

Player Interaction:

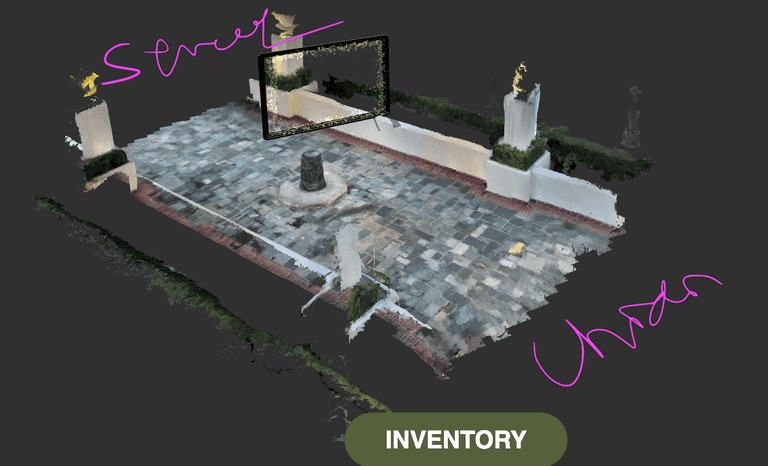

- Developed AR features, inventory management, and scenes using the 8th Wall and A-Frame APIs.

- Used raycasting from the camera to make AR objects interactive.

- Built a system that checks whether AR objects are inside the camera frustum.

- Created a proximity-detection system that tracks the user's distance to the target, refining the experience during portal interactions.
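The three interaction checks above reduce to small pieces of vector math. The sketch below uses plain `{x, y, z}` objects so it runs outside the browser. In the project, these inputs would presumably be three.js vectors taken from the camera and target entities.

Assumptions:
- The frustum test takes a point already in camera space, with the camera looking down -Z as in three.js.
- The function names, radii, and distance thresholds are illustrative, not the project's values.
- Hysteresis in the proximity check is a design choice added here.

In three.js itself, `THREE.Frustum` (`setFromProjectionMatrix`, `containsPoint`) covers the frustum case, and A-Frame's `raycaster` component covers raycasting.

```javascript
const sub = (a, b) => ({ x: a.x - b.x, y: a.y - b.y, z: a.z - b.z });
const dot = (a, b) => a.x * b.x + a.y * b.y + a.z * b.z;

// Ray vs. bounding sphere. Returns the distance along the ray to the first hit, or null.
// `dir` must be normalized.
function raySphere(origin, dir, center, radius) {
  const oc = sub(origin, center);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - radius * radius;
  const disc = b * b - c;
  if (disc < 0) return null; // ray misses the sphere
  const s = Math.sqrt(disc);
  let t = -b - s; // nearer intersection
  if (t < 0) t = -b + s; // origin is inside the sphere
  return t >= 0 ? t : null; // sphere lies entirely behind the origin
}

// Point-in-frustum test for a camera-space point (camera looks down -Z).
// fovY is the vertical field of view in degrees, like A-Frame's camera `fov`.
function inFrustum(p, fovY, aspect, near, far) {
  const depth = -p.z;
  if (depth < near || depth > far) return false;
  const halfH = Math.tan((fovY * Math.PI) / 360) * depth; // half-height of the view at this depth
  const halfW = halfH * aspect;
  return Math.abs(p.x) <= halfW && Math.abs(p.y) <= halfH;
}

// Proximity state with hysteresis so it does not flicker at the boundary:
// becomes "near" below `enter` meters, and leaves only above `exit` meters (exit > enter).
function createProximity(enter, exit) {
  let near = false;
  return (userPos, targetPos) => {
    const v = sub(userPos, targetPos);
    const d = Math.hypot(v.x, v.y, v.z);
    if (!near && d < enter) near = true;
    else if (near && d > exit) near = false;
    return near;
  };
}
```

In an A-Frame `tick` handler, the user position could come from the camera entity's `object3D.getWorldPosition(...)`. The proximity callback would then fire once per frame.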

Infrastructure:

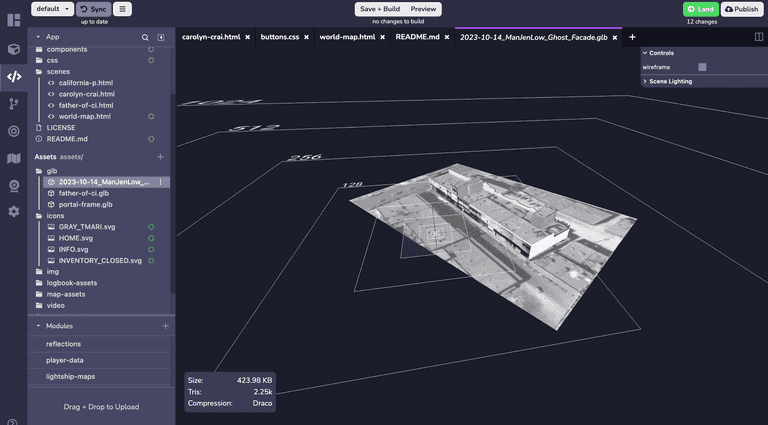

- Guided the optimization of GLB/glTF models alongside 3 designers, focusing on texture management and Draco compression.
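Texture management in this pipeline centered on a 1024x1024 cap. Converting PNG to JPG shrinks the download, but it does not reduce GPU cost. Once decoded, an uncompressed RGBA8 texture takes roughly width × height × 4 bytes whatever its source format, plus about one third more when mipmaps are generated. Lowering the resolution is what cuts that cost.

The helper below is a hypothetical sketch of such a sizing rule. It scales the longer side down to the cap while keeping the aspect ratio, then snaps each side down to a power of two. WebGL 1 requires power-of-two sizes for mipmapping and repeat wrapping.

```javascript
const MAX_TEXTURE_SIZE = 1024; // cap used in the optimization pass

// Largest power of two that is <= n (n >= 1).
function floorPowerOfTwo(n) {
  let p = 1;
  while (p * 2 <= n) p *= 2;
  return p;
}

// Hypothetical sizing rule: cap the longer side at maxSize (keeping aspect ratio),
// then snap each side down to a power of two.
function fitTexture(width, height, maxSize = MAX_TEXTURE_SIZE) {
  const longest = Math.max(width, height);
  const w = longest > maxSize ? Math.floor((width * maxSize) / longest) : width;
  const h = longest > maxSize ? Math.floor((height * maxSize) / longest) : height;
  return { width: floorPowerOfTwo(Math.max(1, w)), height: floorPowerOfTwo(Math.max(1, h)) };
}

// Approximate GPU memory for an uncompressed RGBA8 texture, optionally with a full mip chain.
function gpuBytes(width, height, mipmaps = true) {
  let total = 0;
  let w = width;
  let h = height;
  for (;;) {
    total += w * h * 4;
    if (!mipmaps || (w === 1 && h === 1)) break;
    w = Math.max(1, Math.floor(w / 2));
    h = Math.max(1, Math.floor(h / 2));
  }
  return total;
}
```

Compare a 4096x4096 texture with a 1024x1024 one. Before mipmaps, the first needs 64 MiB of GPU memory and the second needs 4 MiB.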

3D Modeling Integration:

- Used metric units that reflect real-world distances relative to the wayspot.

- Measured the entire sundial wayspot venue at LA Union Station.

- This ensured that the 3D model spawns at the intended location when users scan the wayspot.
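A-Frame measures distances in meters, so offsets measured on site can be placed directly on children of the wayspot entity. The sketch below converts measured offsets into the `x y z` string accepted by A-Frame's `position` attribute.

Assumptions:
- Offsets are measured from the wayspot origin, in meters or feet.
- The wayspot's "forward" direction maps to -Z, following the usual three.js convention. This was not confirmed for 8th Wall wayspot meshes.
- The helper names are hypothetical.

```javascript
const METERS_PER_FOOT = 0.3048;

// Convert an on-site measurement into meters. Hypothetical helper: `unit` is "m" or "ft".
function toMeters(value, unit) {
  if (unit === "m") return value;
  if (unit === "ft") return value * METERS_PER_FOOT;
  throw new Error(`Unsupported unit: ${unit}`);
}

// Build the `position` value for a child of the wayspot entity.
// A-Frame is Y-up: +x is right, +y is up; "forward" is ASSUMED to be -z.
function wayspotPosition({ right, up, forward }, unit = "m") {
  const x = toMeters(right, unit);
  const y = toMeters(up, unit);
  const z = -toMeters(forward, unit);
  // Round to millimeters to keep the attribute string readable.
  return [x, y, z].map((n) => Number(n.toFixed(3))).join(" ");
}
```

The result can be applied with `el.setAttribute("position", wayspotPosition(...))` on an entity nested inside the `named-wayspot` entity. Because it is nested, the offset is interpreted relative to the scanned location.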

Optimization for Web, App, and XR:

- Initially, the model's file size was too large for the web.

- Troubleshot whether the problem came from Rhino, the mesh, or the textures.

- Rhino models with NURBS surfaces, whereas Maya and Blender let you work with polygon meshes instead. glTF stores polygon meshes, so NURBS geometry has to be converted.

- Texture files were too large. The target maximum texture size was 1024x1024.

- Converted textures from PNG to JPG to reduce file size.

- Uploaded the UV-mapped 3D model to gltf.report, which can resize textures and compress the geometry with Draco, a compression library developed by Google.

- 8th Wall only supports the GLB 3D file format, which is also generally preferred for web-based 3D assets in other libraries such as three.js.

- Draco compression can be applied to both GLB and glTF files.

- Maya doesn't support GLB/glTF export, so we ultimately used Blender as the final step of the 3D pipeline.

- 8th Wall / A-Frame API: a simple way to integrate 3D or WebVR content into a website. A-Frame is an easier-to-use wrapper library around three.js.

- Draco: to decode Draco-compressed GLB files, A-Frame's `gltf-model` system needs a decoder path. It is set on the scene as `gltf-model="dracoDecoderPath: https://cdn.8thwall.com/web/aframe/draco-decoder/"`.

Scene setup (assets load when the scene starts; everything inside the `named-wayspot` entity appears after the user scans the sundial):

```html
<a-scene
  landing-page
  activate-portal
  vps-coaching-overlay
  background="color: #303030"
  renderer="colorManagement: true"
  gltf-model="dracoDecoderPath: https://cdn.8thwall.com/web/aframe/draco-decoder/"
  xrextras-runtime-error
  xrextras-loading
  ui-component="currentScene: mobileSundial;"
  xrweb="enableVps: true; allowedDevices: any">

  <!-- Loaded at the beginning of the scene. -->
  <a-assets>
    <a-asset-item id="vps-mesh" src="../assets/glb/sun-dial---i.glb"></a-asset-item>
    <a-asset-item
      id="men-jen-low"
      src="../assets/glb/2023-10-11_MenJenLowBlock_Frame_Without_extrusion-draco.glb"></a-asset-item>
    <a-asset-item id="portal-frame" src="../assets/glb/portal-frame.glb"></a-asset-item>
    <img id="holo-placeholder-img" src="../assets/img/DavidSilhouette.png" />
    <video
      id="portal-video"
      muted
      autoplay
      playsinline
      crossorigin="anonymous"
      loop
      src="../assets/video/manjenlow_monet_2.mp4"></video>
    <img id="skybox-img" src="../assets/img/skybox.jpg" />
    <img id="blob-shadow-img" src="../assets/img/blob-shadow.png" />
    <img id="men-jen-low-img" src="../assets/img/manjenlowCafe.jpg" />
  </a-assets>

  <!-- After the user scans the sundial wayspot, every a-entity inside this one appears. -->
  <a-entity id="VPSMesh" named-wayspot="name: sun-dial---i">
    <!-- The MJL model starts out transparent. -->
    <a-entity
      id="ghostBuilding"
      gltf-model="#men-jen-low"
      model-transparent="opacity: 0.3">
    </a-entity>

    <!-- All other AR objects to spawn go here. -->

    <!-- Inside the portal: the same building without transparency. -->
    <a-entity
      id="men-jen-low"
      gltf-model="#men-jen-low"
      reflections="type: realtime"
      shadow="receive: false">
    </a-entity>
  </a-entity>
</a-scene>
```
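The `model-transparent` component used on `ghostBuilding` is custom, and its source is not shown here. Assuming it simply makes every material of the loaded glTF semi-transparent, a minimal version could look like the hypothetical sketch below. It is not the project's implementation.

`transparent`, `opacity`, `depthWrite`, and `needsUpdate` are standard three.js material properties. `model-loaded` is the event A-Frame's `gltf-model` emits when a model finishes loading. `getObject3D("mesh")` returns the loaded model.

```javascript
// Hypothetical re-creation of a "model-transparent" behavior (not the project's source).
function applyOpacity(root, opacity) {
  root.traverse((node) => {
    if (!node.isMesh) return;
    // A mesh may hold a single material or an array of materials.
    const materials = Array.isArray(node.material) ? node.material : [node.material];
    for (const material of materials) {
      material.transparent = opacity < 1;
      material.opacity = opacity;
      // Skip depth writes while see-through so the ghost does not hide content behind it.
      material.depthWrite = opacity >= 1;
      material.needsUpdate = true;
    }
  });
  return root;
}

if (typeof AFRAME !== "undefined") {
  AFRAME.registerComponent("model-transparent", {
    schema: { opacity: { type: "number", default: 0.3 } },
    init() {
      this.onLoaded = () => applyOpacity(this.el.object3D, this.data.opacity);
      this.el.addEventListener("model-loaded", this.onLoaded);
    },
    update() {
      // Re-apply if opacity changes after the model has already loaded.
      if (this.el.getObject3D("mesh")) this.onLoaded();
    },
    remove() {
      this.el.removeEventListener("model-loaded", this.onLoaded);
    },
  });
}
```

Turning off depth writes is a common choice for see-through "ghost" models. It keeps them from occluding the portal and other AR content drawn after them.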